Competition Results Summary

Mar. 2015

Dongsoo Han, KAIST

Recently, companies and research groups have been actively developing their own indoor positioning systems and announcing their accuracies. However, the reported performance can hardly be taken at face value because the evaluation is mostly performed by the developers themselves. In fact, the performance of an indoor positioning system is influenced by various conditions such as the signal environment, the building structure, and the density of collected fingerprints. Moreover, the evaluation processes are not consistent with each other. A well-defined performance evaluation process for indoor positioning systems would therefore be desirable, and it would be better still if the systems could be evaluated at the same place under the same conditions. In that sense, a competition held at one place with all of the participants is a good opportunity for them to evaluate their systems and compare the results with the techniques and systems of other participants.

Purpose of Competition:

The purpose of the IPIN 2014 competition was manifold. We wanted to establish a well-agreed performance evaluation method for indoor positioning systems and to give the participants an opportunity to learn about evaluation methods for positioning systems. In addition, we expected to see many positioning techniques in action and to compare their advantages and disadvantages.

Issues of Evaluation:

We faced several issues in preparing the competition. Deciding the evaluation criteria was the first thing to be considered and announced to the competitors. Although many quantitative and qualitative evaluation criteria for indoor positioning systems are already known, we chose accuracy as the major criterion for the simplicity and fairness of the evaluation. We decided to use the 75th percentile of the positioning error CDF as the score for the ranking. The signal environment was the second thing to be considered. Since the Bexco competition area was already covered by sufficient WiFi signals, WiFi signals were allowed to be used in the competition. Vision was also allowed because it requires no infrastructure. Sensors embedded in smartphones, such as the 3-axis accelerometer and gyroscope, could be used to incorporate pedestrian dead reckoning (PDR) techniques.
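As a rough illustration of the ranking score, the sketch below computes the 75th percentile of a list of error distances. The nearest-rank definition of the percentile, the function name `percentile_score`, and the sample error values are all assumptions made for illustration; the report does not state which percentile definition the organizers used.

```python
import math

def percentile_score(errors_m, pct=75.0):
    """Return the nearest-rank percentile of error distances in metres.

    Assumption: nearest-rank percentile. Other definitions (for example,
    linear interpolation) can give slightly different values.
    """
    if not errors_m:
        raise ValueError("no error samples")
    ordered = sorted(errors_m)
    # 1-based rank of the smallest sample that covers pct percent of the data.
    rank = math.ceil(pct / 100.0 * len(ordered))
    return ordered[max(rank, 1) - 1]

# Illustrative error distances (metres), not competition data.
sample_errors = [1.2, 0.8, 3.5, 2.1, 4.0, 1.7, 2.9, 5.3]
score = percentile_score(sample_errors)
print(f"75th percentile error: {score} m")
```

A lower score means that three quarters of the position estimates fell within a smaller distance of the ground truth, so teams would be ranked in ascending order of this value.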

Preparations for Competition:

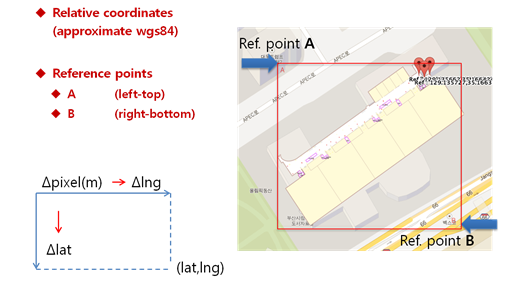

Many things had to be prepared for the competition. The first was to determine the evaluation areas and prepare the maps of those areas. We chose the Bexco exhibition center in Busan, Korea, because the IPIN 2014 conference was scheduled to be held there. We distributed the map image of the Bexco buildings along with the top-left and bottom-right corner coordinates so that the competitors could test their systems with the map in advance (see Figure 1). The map was distributed to the competitors through e-mail, the IPIN 2014 web site, and the KAILOS site.

Figure 1. The format of the distributed indoor maps.
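A map image with known top-left and bottom-right corner coordinates can be used to convert between pixel positions and geographic coordinates. The following is a minimal sketch, assuming a north-up, axis-aligned image and simple linear interpolation, which is reasonable only over an area as small as a building. The function name and the corner coordinates below are hypothetical and are not the values that were distributed.

```python
def pixel_to_latlon(x, y, width, height, top_left, bottom_right):
    """Map a pixel (x, y) to (lat, lon) by linear interpolation.

    Assumptions: the image is north-up and axis-aligned, pixel (0, 0) is
    the top-left corner, and corners are given as (lat, lon) tuples.
    """
    lat_tl, lon_tl = top_left
    lat_br, lon_br = bottom_right
    # Longitude varies along the image x axis, latitude along the y axis.
    lon = lon_tl + (x / width) * (lon_br - lon_tl)
    lat = lat_tl + (y / height) * (lat_br - lat_tl)
    return lat, lon

# Hypothetical corner coordinates and image size, for illustration only.
TOP_LEFT = (35.1700, 129.1350)
BOTTOM_RIGHT = (35.1680, 129.1380)
lat, lon = pixel_to_latlon(500, 400, 1000, 800, TOP_LEFT, BOTTOM_RIGHT)
print(lat, lon)  # centre of the image
```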

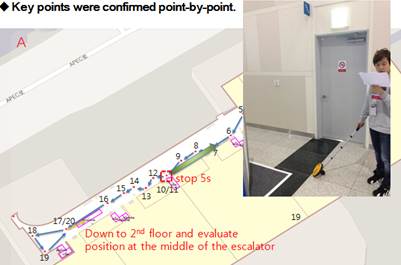

The second thing required for the competition was to determine the evaluation paths and the points at which the evaluation data were to be collected. Straight lines, S-curves, stays, and loops were combined in order to simulate the natural movements of users in indoor environments. Figure 2 shows a part of the planned evaluation paths and points. The evaluation paths and points were disclosed only when the evaluation started, to prevent the competitors from adapting their positioning systems to them.

Figure 2. Confirming the evaluation paths and points.

The third thing we prepared was a set of interfaces and software modules to collect data from the competitors' positioning systems. The interfaces and software modules were distributed to the competitors in advance for testing. By checking the connections between their systems and the distributed software modules, the competitors could confirm that the evaluation of their systems at Bexco would work.

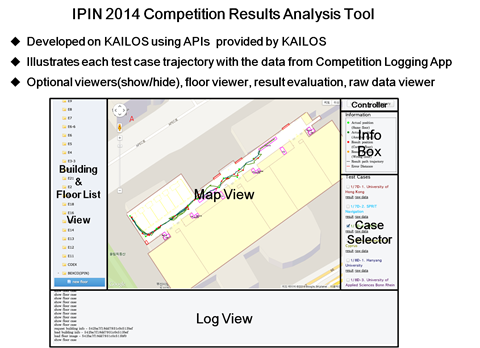

The last thing we prepared was a result analysis tool, which also visualizes the results as CDF graphs and draws the error distances as lines on the paths (see Figure 3). Thanks to the tool, we could rank the positioning results within a couple of hours of the data collection.

Figure 3. Competition results analysis tools and interfaces.
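The core computation behind such an analysis can be sketched in a few lines: an error distance between each reported position and the corresponding evaluation point, followed by an empirical CDF over those distances. This is an illustrative sketch only, not the organizers' tool. It assumes positions are given as latitude and longitude in degrees, uses the haversine formula on a spherical Earth, and the function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; spherical approximation

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def empirical_cdf(errors_m):
    """Return (error, cumulative fraction) pairs sorted by error."""
    ordered = sorted(errors_m)
    n = len(ordered)
    return [(e, (i + 1) / n) for i, e in enumerate(ordered)]

# Illustrative error values (metres), not competition data.
for error, fraction in empirical_cdf([3.0, 1.0, 2.0, 4.0]):
    print(f"{fraction:.2f} of estimates are within {error} m")
```

Plotting the pairs returned by `empirical_cdf` gives the kind of CDF graph described above, and the error value at a cumulative fraction of 0.75 corresponds to the ranking score.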

Evaluation Process:

The evaluation points were physically marked with sticky colored tape on the evaluation paths early in the morning of the competition day so that the evaluators and competitors would not pass an evaluation point without collecting data. Moreover, a couple of volunteers escorted the evaluators and competitors so that they followed the planned paths. The evaluators and competitors were instructed to press the button of the evaluation app at every evaluation point on the paths (see the TPC guidelines for more details).

Each system was evaluated twice: first by an evaluator, and then by the competitor. Each evaluation took about 20 minutes because the total length of the evaluation path was around 600 meters. Around 10 evaluators volunteered to take part in the evaluation after attending a short instruction class.

Evaluation Results:

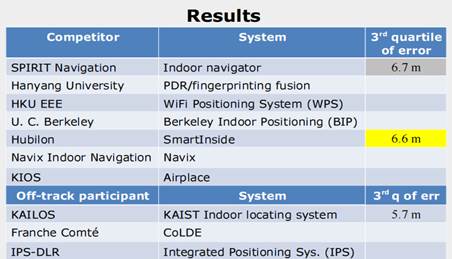

Around 10 teams from 7 countries participated in the competition. The competition was run in two tracks: a competition track and a non-competition track. Figure 4 summarizes the results of the competition. Only the two top-ranked teams in the competition track and the top-ranked team in the non-competition track agreed to disclose their detailed results.

Figure 4. IPIN 2014 Competition Results.

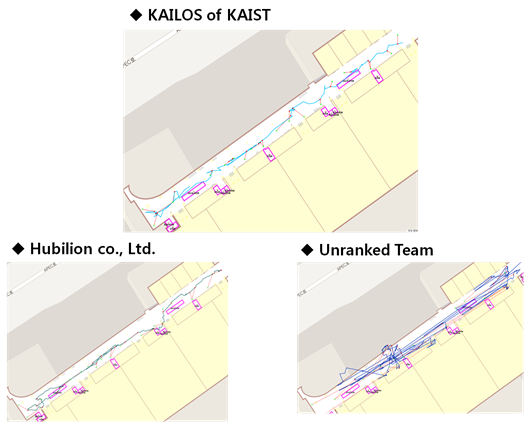

Figure 5 contrasts the trajectories of KAILOS of KAIST, SmartInside of Hubilon, and an unranked team. As the figure shows, the difference in accuracy can be confirmed visually with the result analysis tool.

Figure 5. The trajectory visualization with the result analysis tool.

Lessons Learned from the Competition:

We learned many lessons from the competition. First, the competitors themselves confirmed the weak and strong points of their positioning systems by comparing them with other systems. By participating in the competition, they could also learn a more practical way of evaluating the performance of their positioning systems. In addition, the competition provided opportunities for competitors to compare the pros and cons of various indoor positioning methods such as WLAN-based, PDR-based, and vision-based methods.

Although the competition management team had prepared many things, including maps, evaluation paths, and evaluation points, some competitors had trouble during the evaluation. For example, some competitors had trouble sending their data to the evaluation server. Fortunately, the competition management team resolved this problem right away. One critical error made by one competitor was swapping latitude and longitude. Unfortunately, this error was found only after the competition.
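A swapped latitude and longitude is the kind of error that a simple sanity check on incoming data could flag during the evaluation rather than after it. The sketch below is one possible check, not something the organizers describe using. It assumes the evaluation area can be described by a latitude and longitude bounding box; the function name and the box values are hypothetical.

```python
def classify_fix(lat, lon, bbox):
    """Classify a reported position against a bounding box.

    bbox is (lat_min, lat_max, lon_min, lon_max) in degrees.
    Returns "ok", "swapped" (the point fits only with lat and lon
    exchanged), or "out_of_bounds".
    """
    lat_min, lat_max, lon_min, lon_max = bbox

    def inside(a, b):
        return lat_min <= a <= lat_max and lon_min <= b <= lon_max

    if inside(lat, lon):
        return "ok"
    if inside(lon, lat):
        return "swapped"
    return "out_of_bounds"

# Hypothetical bounding box around the evaluation area, for illustration only.
AREA_BBOX = (35.16, 35.18, 129.13, 129.14)
print(classify_fix(35.169, 129.136, AREA_BBOX))   # correctly ordered
print(classify_fix(129.136, 35.169, AREA_BBOX))   # latitude and longitude exchanged
```

Such a check works only where the latitude and longitude ranges of the area do not overlap, as is the case for this hypothetical box.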

One thing we should not fail to mention is that many things remain to be considered to make the competition fairer and more complete. This time, we considered only accuracy owing to a lack of time and experience. Therefore, we should not simply conclude that one positioning system is inferior to others just because it showed slightly worse accuracy in this competition.